Narratives in Crowdsourced Evaluation of Visualizations: A Double-Edged Sword?

Experimental Material

Evanthia Dimara, Anastasia Bezerianos and Pierre Dragicevic

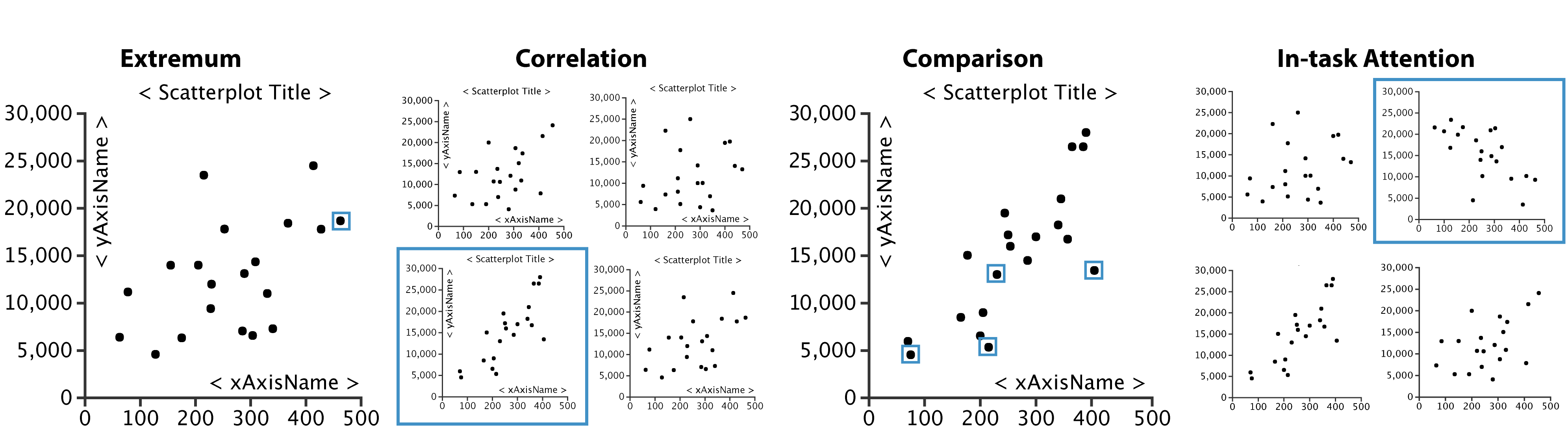

We explore the effects of providing task context when evaluating visualization tools using crowdsourcing. We gave crowdsourcing workers i) abstract information visualization tasks without any context, ii) tasks where we added semantics to the dataset, and iii) tasks with two types of backstory narratives: an analytic narrative and a decision-making narrative. Contrary to our expectations, we did not find evidence that adding data semantics increases accuracy, and further found that our backstory narratives may even decrease accuracy. Adding dataset semantics can, however, increase attention and provide subjective benefits in terms of confidence, perceived easiness, task enjoyability and perceived usefulness of the visualization. Nevertheless, backstory narratives did not appear to provide additional subjective benefits. Although these findings are preliminary, they suggest that narratives may have complex and unexpected effects, calling for more studies in this area.

Paper PDF

https://hal.inria.fr/hal-01448095/document

Stimuli and raw data

Click on the link below and follow the instructions.

R code

Contact

License

All material on this page is CC-BY-SA. You can reuse or adapt it provided you cite our paper and/or link to http://www.aviz.fr/narratives.